VIANOPS Introduces Next-Generation Monitoring Platform for AI-Driven Enterprises

From traditional ML models to LLMs, VIANOPS Monitors Drift, Data Quality, and Bias-Prone Models to Ensure Reliability and Performance, without the High Cost

By: Dr. Navin Budhiraja, Vianai Systems Chief Technology Officer & Head of VIANOPS Platform

High-Scale Monitoring, On-the-Fly Root-Cause Analysis, and Smarter Alerts – With Unparalleled Affordability

In just the last six months, there has been an explosion in the types of models enterprises want to deploy – to improve business processes, power better decision-making, increase customer and partner engagement, and improve employee productivity.

These AI-forward enterprises are companies where data science, machine learning, architecture, infrastructure, IT, and other teams feel immense pressure to incorporate new AI technologies to support business users and outcomes, but that don’t yet have the tools to fully and reliably monitor those technologies once deployed.

Models are notoriously prone to faltering once deployed, because real-world conditions keep changing. They are also known to perpetuate bias and unfair practices. Newer types of models such as LLMs introduce additional risks, including hallucinations, fabrications, and other ethical issues.

Once a model is deployed, several questions need to be answered continuously:

- Is the model performing as intended, once deployed in the real world?

- Is the model drifting beyond the allowed threshold(s)?

- Is the model perpetuating some historical bias that is not acceptable?

- Are we able to see into the details, across extreme complexity and scale?

With that in mind, we expanded the capabilities of VIANOPS at a similarly rapid pace to meet the emerging and accelerating demands of AI-forward enterprises.

And we’ve made it available to try for free.

Try it Free

The latest release of VIANOPS is now available to try for free. Any ML engineer, data scientist, or other ML practitioner can see and test expanded capabilities, the simplicity and usability of the platform experience, and the ability to create custom plans unique to a particular team or company’s needs.

Users can sign up free directly, or learn more here about our free trial offering before signing up.

Robust, High-Scale Monitoring

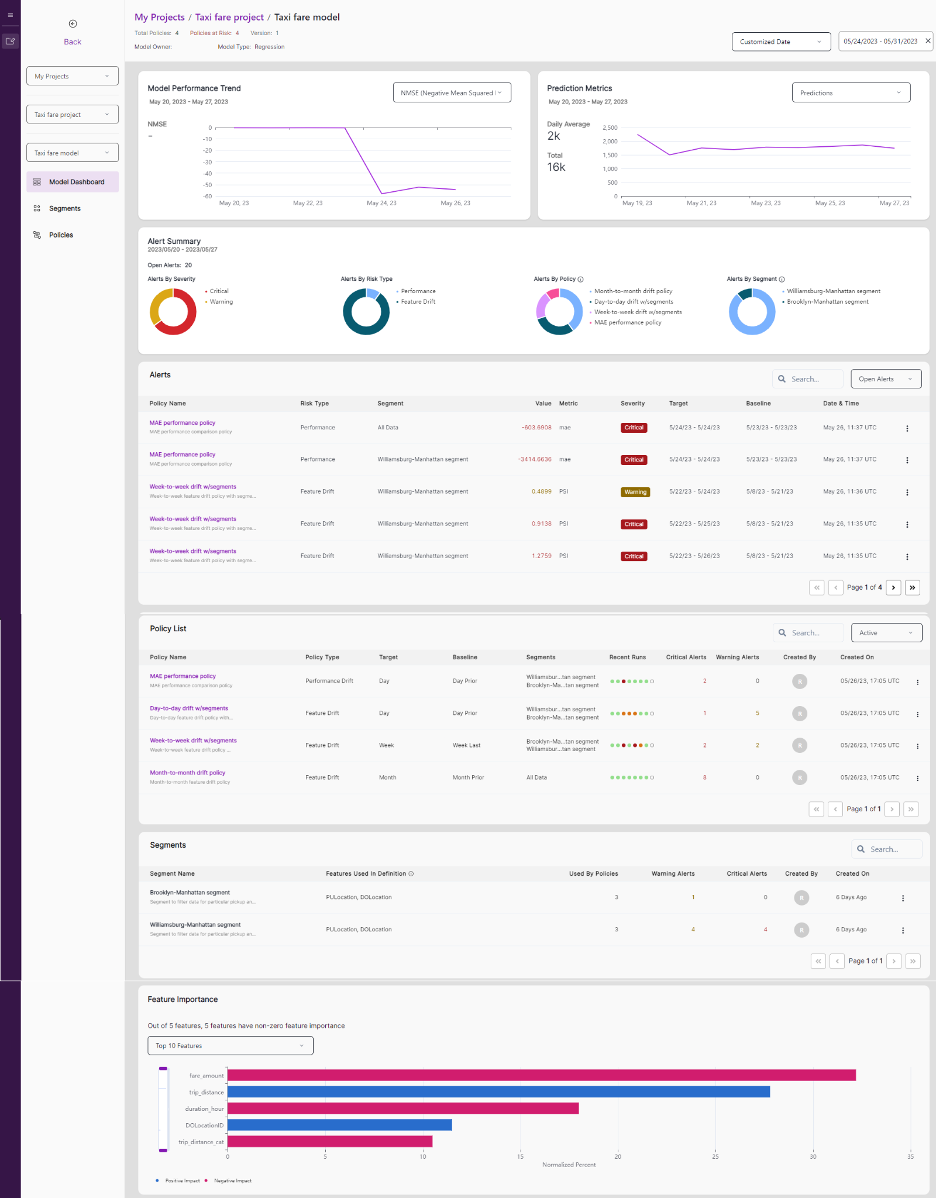

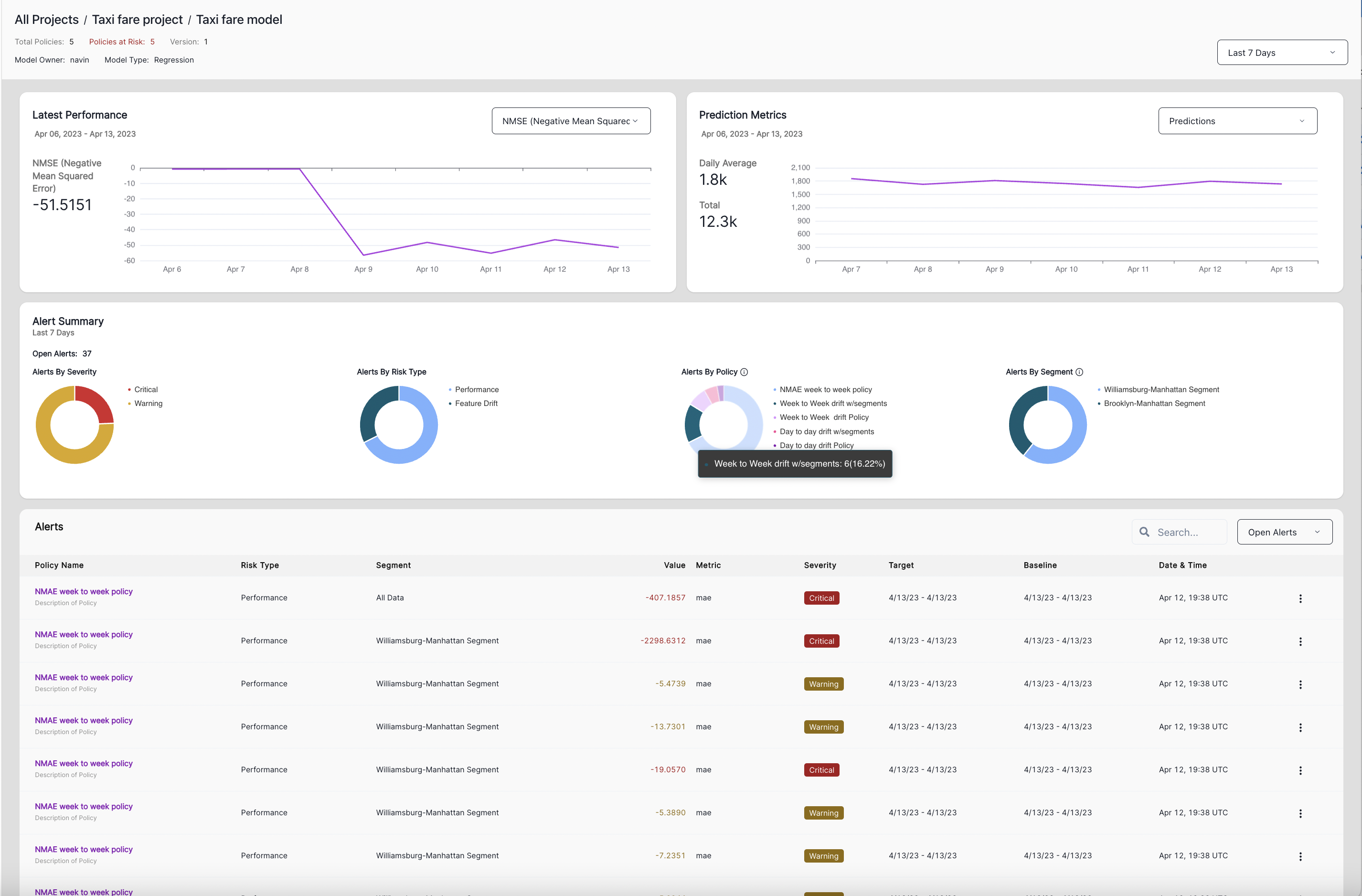

The latest iteration of the VIANOPS monitoring platform is engineered to empower data scientists and MLOps teams to manage complex, feature-rich, high-scale ML models that make their businesses run.

What do we mean by “high-scale”? It’s not necessarily about the number of models, although it could be. Instead, our definition relates to the complexity of an individual model, and to what it takes to properly and confidently monitor all of the details that might cause its performance to drop below what is acceptable.

VIANOPS can monitor models with tens of thousands of predictions per second, hundreds of features, segments, and subsegments, and millions or billions of transactions, across multiple time windows, to find and solve the problems that degrade model performance.

VIANOPS monitors three critical assets:

- Input Data – the data used for the prediction

- Output Data – the prediction or target of the model

- Ground Truth Data – the real-world data used to compare with the output to determine the prediction’s accuracy

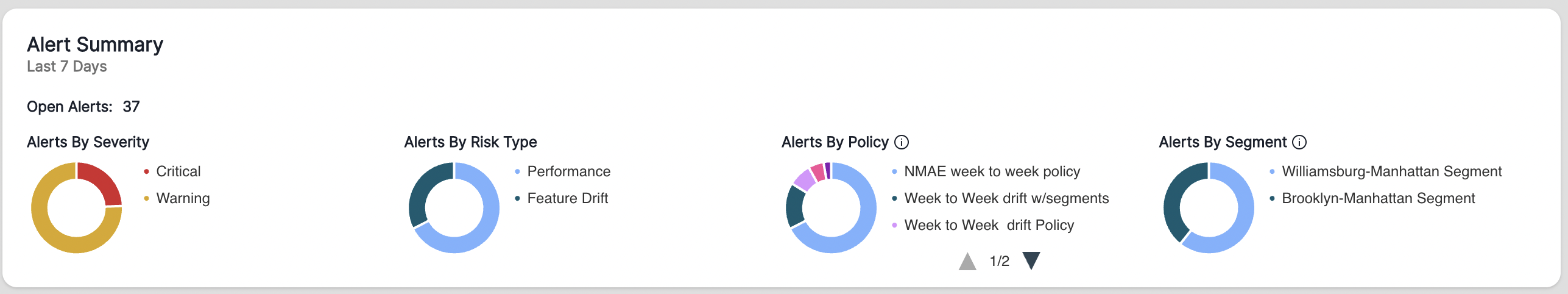

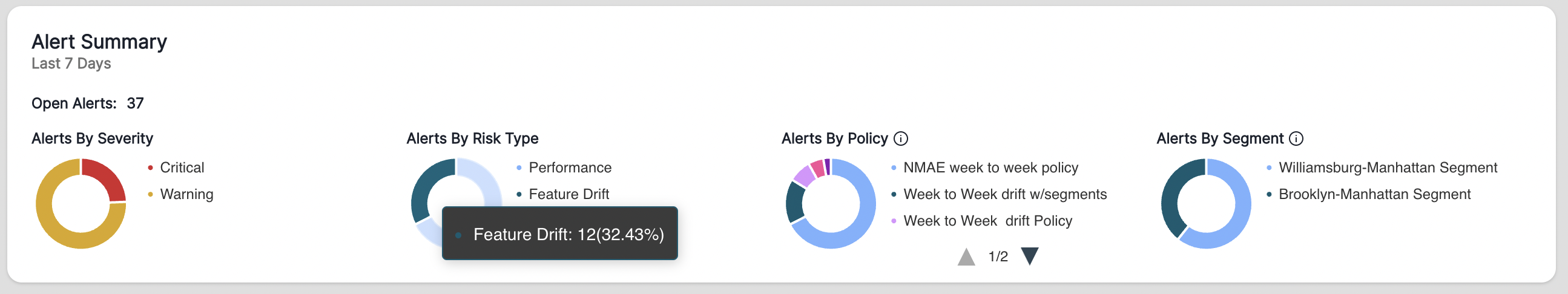

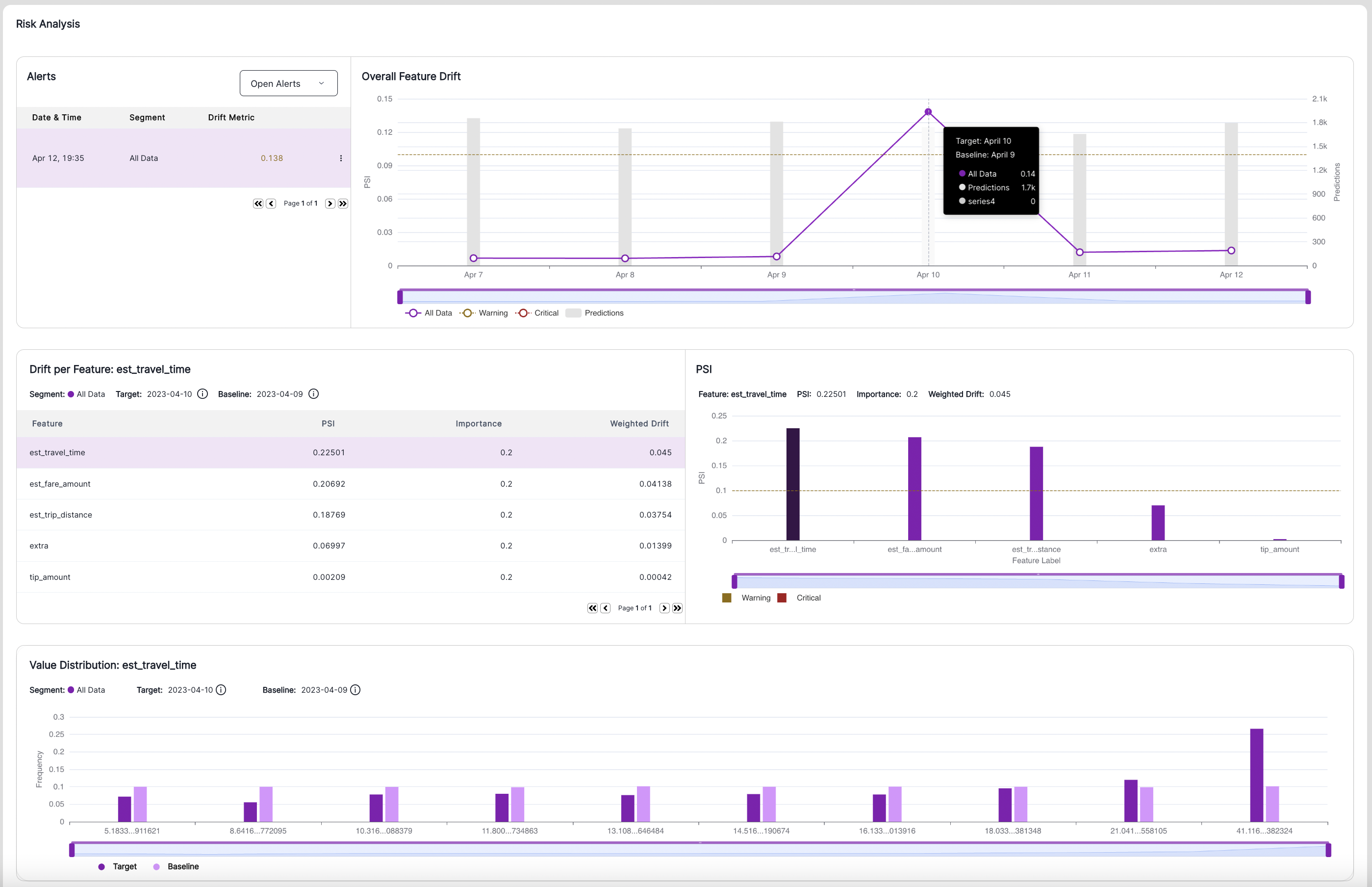

VIANOPS tracks changes (or drift) in the distributions of the input data, the model’s predictions, and the ground truth data, and determines when any of them has changed significantly. It can compare current distributions against either the training data or production data from earlier time windows (e.g. last week or last quarter). Users can also set thresholds for how much variation is acceptable. If distribution drift moves outside these thresholds, smart alerts are triggered so that teams can investigate the cause, then retrain and deploy a better model. This detection and retraining can also be automated using the VIANOPS APIs and Python SDK.
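To make the threshold check concrete, here is a minimal sketch of the idea. It is not the VIANOPS API or SDK. The function names `psi` and `drift_alert`, the choice of the Population Stability Index as the drift metric, the ten-bin histogram, and the 0.2 threshold are all assumptions made for illustration.

```python
import math
from typing import Sequence, Tuple


def psi(baseline: Sequence[float], current: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index between two samples of one numeric feature.

    Bin edges come from the baseline sample (an illustrative choice).
    """
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def fractions(values: Sequence[float]) -> list:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values to edge bins
            counts[idx] += 1
        eps = 1e-6  # floor so empty bins do not cause log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    b, c = fractions(baseline), fractions(current)
    return sum((ci - bi) * math.log(ci / bi) for bi, ci in zip(b, c))


def drift_alert(baseline: Sequence[float], current: Sequence[float],
                threshold: float = 0.2) -> Tuple[bool, float]:
    """Return (alert, score); alert is True when drift exceeds the threshold.

    The 0.2 default is a common rule of thumb, assumed here, not a VIANOPS setting.
    """
    score = psi(baseline, current)
    return score > threshold, score


# Hypothetical data: a training-time sample and a shifted production sample.
training = [i / 100 for i in range(1000)]
production = [x + 5 for x in training]
alert, score = drift_alert(training, production)
```

In a sketch like this, the baseline could equally be last week's or last quarter's production data rather than the training sample; only the first argument changes.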

Once an ML model is in production, drift is inevitable because the model’s training data will increasingly differ from real-world data over time. VIANOPS features robust capabilities, including Root Cause Analysis, which can identify patterns at extreme scale in various spliced datasets (arbitrary segments of data) to catch minuscule changes and help data scientists and ML engineers take mitigating action before the model degrades significantly and produces incorrect outcomes.
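The segment-level analysis described above can be sketched in a few lines. This is only an illustration of the general idea, not how VIANOPS implements Root Cause Analysis. The record fields (`region`, `prediction`, `actual`), the function names, and the use of error rate as the per-segment metric are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def error_rate_by_segment(records: Iterable[dict], segment_key: str) -> Dict[str, float]:
    """Fraction of wrong predictions within each segment."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for r in records:
        seg = r[segment_key]
        totals[seg] += 1
        errors[seg] += int(r["prediction"] != r["actual"])
    return {seg: errors[seg] / totals[seg] for seg in totals}


def rank_segments(baseline: Iterable[dict], current: Iterable[dict],
                  segment_key: str) -> List[Tuple[str, float]]:
    """Rank segments present in both windows by increase in error rate."""
    base = error_rate_by_segment(baseline, segment_key)
    cur = error_rate_by_segment(current, segment_key)
    shared = base.keys() & cur.keys()
    changes = {seg: cur[seg] - base[seg] for seg in shared}
    return sorted(changes.items(), key=lambda kv: kv[1], reverse=True)


def make(region: str, wrong: int, total: int) -> List[dict]:
    """Build hypothetical records with `wrong` incorrect predictions out of `total`."""
    return [
        {"region": region, "prediction": 1, "actual": 0 if i < wrong else 1}
        for i in range(total)
    ]


# Hypothetical windows: the error rate rises in one region and stays flat in the other.
last_week = make("EU", 0, 4) + make("US", 1, 4)
this_week = make("EU", 2, 4) + make("US", 1, 4)
ranked = rank_segments(last_week, this_week, "region")
```

The segment at the top of `ranked` is the one whose error rate grew the most between the two windows, which is where an investigation would start.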

No one is better at this level of scale and complexity.

Low Cost

Another key VIANOPS differentiator is scale at low cost. Our customers can monitor the most complex AI models, both today’s and future types (including Large Language Models, or LLMs), without needing expensive new infrastructure to reach that scale. This is key to ensuring AI is reliable and accessible for all types of enterprises that want to take advantage of the benefits but don’t have dramatically larger budgets than before.

VIANOPS is here for enterprises looking to affordably scale their ML operations. For example, if a model handles billions of transactions daily, VIANOPS can monitor, splice, and analyze data at that volume.

AI Model Evolution

What was once the land of tabular data-based models has expanded at breakneck speed to include Large Language Models (LLMs), generative AI models, computer vision models, and many other model types.

On improving employee productivity specifically, LLMs and generative AI seem to be driving a desire for radical productivity improvements at scale across an organization, in every role – no longer just in specialized roles where knowledge of AI and AI systems is needed, but for every worker (sales, marketing, HR, finance, legal, and so on).

As these models rapidly descend on the enterprise AI landscape, a new kind of monitoring capability is necessary to track the performance and reliability of these new, highly error-prone types of models. They hallucinate, learn and perpetuate biases, and otherwise introduce a host of ethical concerns and risks for an enterprise.

As more and more companies rely on complex ML models as core to their business processes, the need to monitor and manage the performance of these models becomes increasingly important.

Best-in-Class User Experience

The need for model monitoring is indisputable, but what also sets VIANOPS apart from other monitoring platforms is its design. Good design is key to inspiring trust in the system and the technology, and ease of use is imperative for collaboration at scale across the enterprise.

Ease of Integration into Any Landscape

Easy, secure integration is critical to enterprises because machine learning data is highly sensitive and enterprises typically don’t like to release it to the public cloud. Keeping information secure while automating processes is essential, and every enterprise has a different setup and may run in a different cloud. Because every company has its own structure, VIANOPS is designed to integrate into the company’s existing workflow.

VIANOPS provides APIs for seamless integration with:

- Various data sources like streaming and file systems

- Other MLOps platforms like AWS SageMaker and Databricks

- Collaboration and communication tools like Slack and email

By providing monitoring and alerting capabilities across clouds and data sources, VIANOPS serves as an all-in-one platform for observing a variety of data points and technologies, helping users save time and energy for higher-level tasks.

Key Capabilities of the VIANOPS Platform – from MLOps to LLMOps

- Monitoring: Monitor AI/ML models across multi-faceted dimensions, tens of thousands of inferences per second, hundreds of features.

- Root Cause Analysis: Run on-the-fly, ad-hoc analyses and deep dives to find patterns in millions of data points.

- Mitigation: Mitigate risks, trigger automated workflows to solve problems, know when to retrain models.

- Validation: Validate a new version after retraining, or a challenger model before promoting to champion.

Looking Toward the Future

The updated VIANOPS platform is built to support companies that run ML models as core to their business operations, have highly complex models, and require scale. The rapid growth in the number of enterprises that rely on ML models as core to the entire business model is a new element in today’s landscape. This means not only models running in support of various processes but models that run the business, operationalizing the goods or services themselves.

Sign up for a free 30-day trial to see how VIANOPS can take your business to the next level of ML monitoring and observability for every stage of the ML lifecycle.

And reach out with questions, or to learn more about how we can help your business’s ML operations!