Introduction

Language Models (LMs), particularly Large Language Models (LLMs), have gained recent attention for their ability to generate natural language responses and content in interactive experiences that were not possible before. The potential for increased productivity and improved user experiences in domains such as marketing, customer support, chatbots, and content generation became clear immediately. Whether for idea generation, content generation, research assistance, conversational experiences, or simply as a faster way to get something started or finished, LLMs have the potential to transform how people work across a wide variety of job roles and industries.

However, as with any new technology, especially one that has advanced faster than most people can understand or explain it, bringing LLMs into the enterprise carries significant risk when they are applied to mission-critical parts of a business.

There are generally two main areas in which LLMs pose the greatest risk (financial and reputational) to a business.

One significant risk is the generation of hallucinatory or fictional content. LLMs have the ability to generate text that may appear realistic, but it is important to recognize that these models are based on patterns learned from large datasets rather than actual understanding or knowledge. The risk of hallucination arises from the limitations of LLMs in distinguishing between factual information and fictional content. LLMs can generate text that sounds convincing but lacks verifiable accuracy. This can lead to the dissemination of misinformation or the creation of fictional narratives that may be misleading or deceptive, or lead to risky business decisions based on false information.

The other area covers further major risks of using LLMs for business purposes, such as biased, offensive, or inappropriate outputs, leakage of private and confidential information, and jailbreaking of prompts. These risks can harm a brand’s reputation, violate ethical standards, or cause significant financial impact to the business.

To address these risks, businesses need to exercise caution and implement measures to ensure responsible and ethical use of LLMs. These measures might include corporate AI policies, legal reviews of certain uses, and other guidelines.

In addition, foundational to responsible and ethical use of LLMs is monitoring the model itself – specifically, implementing monitoring systems to detect and mitigate biases, misinformation, and offensive content generated by LLMs.

In this blog, we use hila, a financial research assistant (an AI chat assistant for investors and finance professionals), as an example to show how LLMs can be monitored to safeguard their appropriate, responsible, and ethical use by business users.

hila is a powerful question-answer engine for financial research (covering both unstructured and structured data) developed by Vianai. hila leverages LLMs to radically accelerate financial research by answering questions about the financials of public companies, drawing on documents such as 10-Ks and earnings transcripts, in just a few seconds. This saves users the hours they would otherwise spend studying these documents manually to find insights.

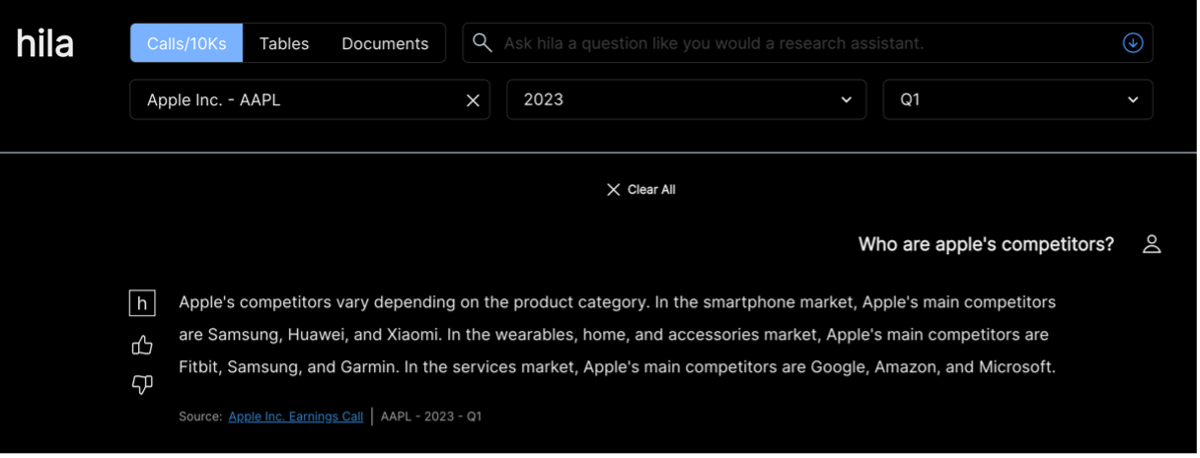

In the screenshot shown here, a user asks hila about Apple’s competitors based on its Q1 earnings transcript, and hila instantly provides a natural, human-language answer.

The user asks hila about Apple’s competitors based on its Q1 earnings transcript and gets a human-readable answer in seconds.

To make sure hila continues to perform as expected, and to support Vianai’s Zero Hallucination™ approach even as user behavior and interests change, we monitor the model. The goals are to ensure that hila’s responses are neither hallucinated nor offensive, to ensure that users are not asking biased or inappropriate questions, and to understand how the questions users ask about different companies change over time. To do this, we use VIANOPS, an AI and ML model monitoring platform developed by Vianai.

Overview of the example

In this example, we discuss monitoring the LLM in hila using VIANOPS. The data used for monitoring consists of the questions users asked hila over a two-month period from March to April, together with hila’s responses. VIANOPS is equipped with monitoring policies to track various aspects, including the companies that users inquire about, question length as a representation of user behavior, semantic similarity, topic analysis of questions over time to identify shifts in interests, and potential issues such as hallucination and the presence of confidential or privacy-related information. VIANOPS triggers alerts to the application owner and hila data scientists when any metric exceeds a predefined threshold.

Transforming natural language data into measurable data

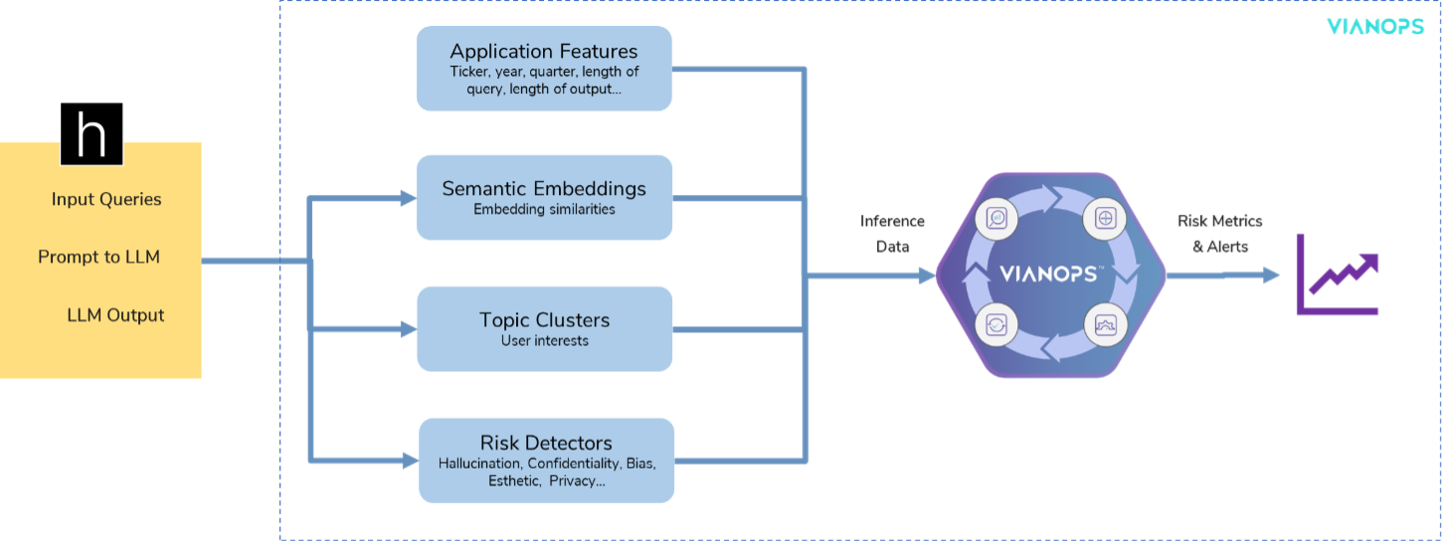

To effectively monitor a Large Language Model (LLM) that operates on unstructured natural language data, VIANOPS automatically converts this unstructured data into measurable and quantifiable data that can be analyzed. For this, hila provides VIANOPS with input and output data from its underlying LLM, as well as application-level features. VIANOPS then transforms this data into different feature categories suitable for monitoring purposes. These categories include:

1. Application-related features: These features encompass information such as the company code (ticker), year, and quarter of user input or LLM output. VIANOPS also calculates statistics like question length to provide additional insights.

2. Semantic embeddings: VIANOPS leverages the LLM’s embeddings to transform user input or LLM output into high-dimensional vectors, facilitating the computation of similarities. This aids in detecting the diversity of user interests and ensuring hila meets user expectations.

3. Topic clusters: Using unsupervised clustering techniques, VIANOPS identifies the topics associated with each user input. This analysis enables a deeper understanding of the shift in user interests and whether hila is effectively addressing the intended questions.

4. Risk features: This crucial set of features focuses on detecting the appropriate usage of the LLM. It includes:

   a. Hallucination: Identification of non-factual information generated by hila.
   b. Confidentiality: Detection of any confidential information present in user questions or responses.
   c. Privacy: Identification of any privacy-related information in user questions or responses.
   d. Bias, Toxicity, or Offensiveness: Assessment of response bias, toxicity, or offensive content.
   e. Security: Detection of prompt hijacking or security-related concerns.

Each LLM input and output is represented by several categories of features: application-level features, embedding features, topic-cluster features, and risk-related features. All of these features can be monitored in VIANOPS.
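To make the first category concrete, here is a minimal sketch of how application-related features could be derived from a raw question. It is not VIANOPS code: the `AppFeatures` record, the `extract_app_features` function, the small `KNOWN_TICKERS` set, and the regular expressions are all assumptions made for illustration.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical ticker list for illustration; a real system would use a
# complete reference table of company codes.
KNOWN_TICKERS = {"AAPL", "AMZN", "TSLA", "BA", "DFS", "DLTR"}


@dataclass
class AppFeatures:
    ticker: Optional[str]    # company code mentioned in the question, if any
    year: Optional[int]      # four-digit year mentioned, if any
    quarter: Optional[int]   # fiscal quarter 1-4 mentioned, if any
    question_length: int     # number of whitespace-separated words


def extract_app_features(question: str) -> AppFeatures:
    """Turn one free-text question into a small structured record."""
    tokens = re.findall(r"[A-Za-z0-9]+", question)
    # First token that exactly matches a known ticker symbol.
    ticker = next((t for t in tokens if t in KNOWN_TICKERS), None)
    year_match = re.search(r"\b(20\d{2})\b", question)
    quarter_match = re.search(r"\bQ([1-4])\b", question, flags=re.IGNORECASE)
    return AppFeatures(
        ticker=ticker,
        year=int(year_match.group(1)) if year_match else None,
        quarter=int(quarter_match.group(1)) if quarter_match else None,
        question_length=len(question.split()),
    )


features = extract_app_features("What were AAPL revenues in Q1 2023?")
```

Once each question is reduced to a record like this, the ticker, year, quarter, and length columns can be tracked with ordinary structured-data drift metrics.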

Monitoring data drift in VIANOPS

hila periodically sends its data to VIANOPS for monitoring. Upon receiving the data, VIANOPS transforms it into the aforementioned feature categories and ingests it into the monitoring system. To monitor the data effectively, a collection of monitoring policies is created, targeting different aspects of hila. The flexible configuration allows for customized policies with varying subsets of features, target windows, baseline windows, drift measurement metrics, alert thresholds, and execution schedules. Some policies even offer more granular monitoring by segmenting the data based on ticker symbols to track shifts in user interest regarding popular companies like Apple and Tesla.
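The configuration options listed above can be pictured as a small record per policy. The sketch below is only an illustration of that idea: the `MonitoringPolicy` class, its field names, and the example values (including the `"psi"` metric label and the threshold of 0.2) are hypothetical and do not reflect the actual VIANOPS API or the settings used for hila.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class MonitoringPolicy:
    """Hypothetical container for one drift-monitoring policy."""
    name: str
    features: List[str]       # subset of features the policy watches
    target_window: str        # period being evaluated, e.g. "week"
    baseline_window: str      # period it is compared against
    drift_metric: str         # label of the drift measure to compute
    alert_threshold: float    # alert when drift exceeds this value
    schedule: str             # how often the policy runs
    segments: List[str] = field(default_factory=list)  # optional data slices

    def should_alert(self, drift_value: float) -> bool:
        # An alert fires only when the measured drift is above the threshold.
        return drift_value > self.alert_threshold


# Example values are assumptions chosen for illustration.
ticker_policy = MonitoringPolicy(
    name="weekly-ticker-drift",
    features=["ticker"],
    target_window="week",
    baseline_window="prior week",
    drift_metric="psi",
    alert_threshold=0.2,
    schedule="weekly",
    segments=["AAPL", "TSLA"],
)
```

Keeping the threshold and windows in the policy, rather than in the metric code, is what lets the same metric be reused with different sensitivities for different segments.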

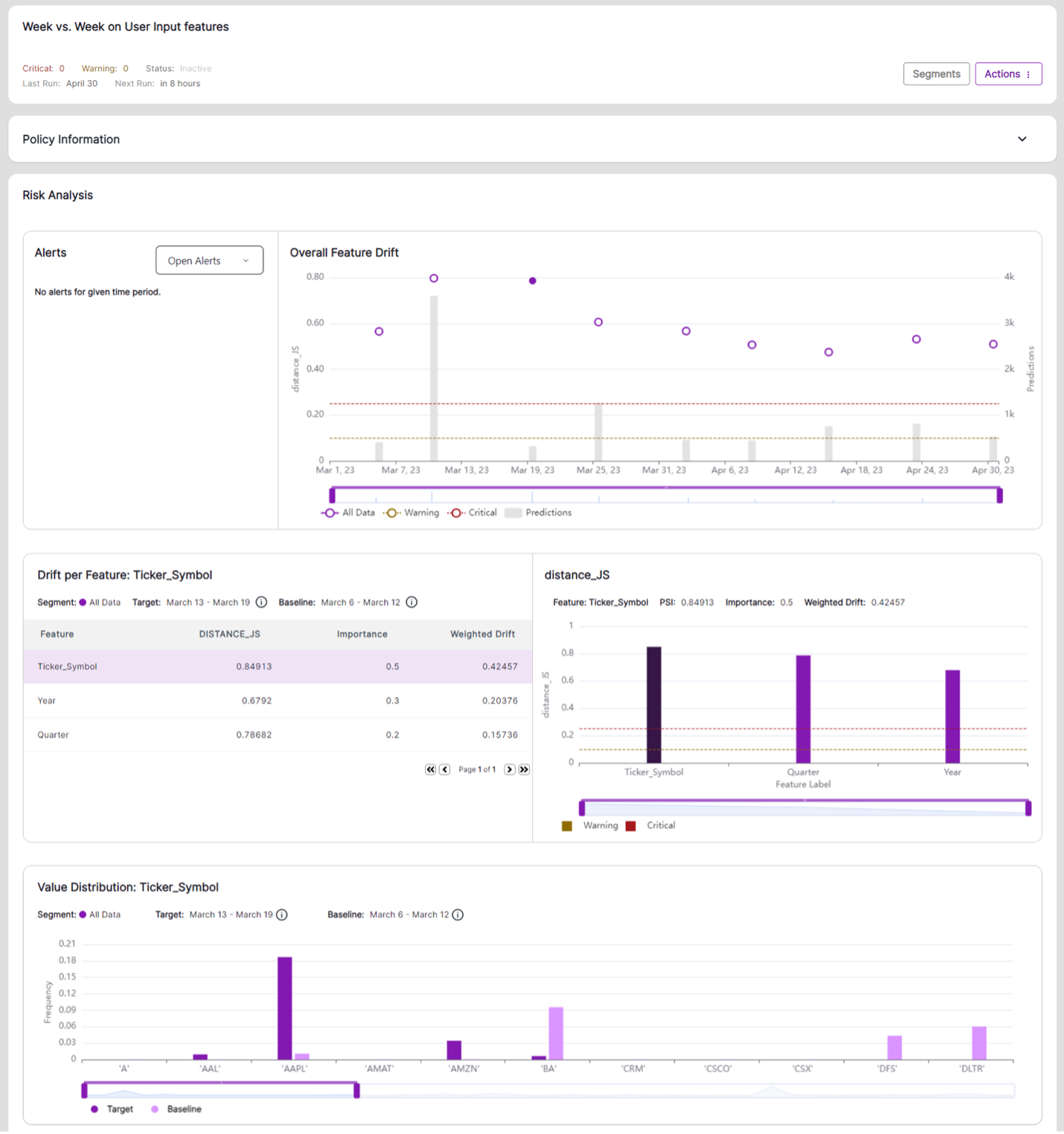

For instance, one of the monitoring policies focuses on tracking the weekly preferences of users in terms of the companies (ticker) they inquire about the most. It is intriguing to observe that each week exhibits a preference for specific companies. For example, during the week of March 13, there was a surge in questions about Apple and Amazon, whereas the previous week saw a higher volume of inquiries related to BA, DFS, and DLTR.

The week-over-week drift in ticker shows that users’ interests varied widely across companies: in the week of March 13, users asked many questions about Apple and Amazon, while in the prior week most questions were about BA, DFS, and DLTR.
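One common way to quantify this kind of shift in a categorical feature is the Population Stability Index (PSI). The post does not say which metric this policy uses, so the sketch below is an assumption chosen for illustration; the function names and the sample ticker lists are likewise made up.

```python
import math
from collections import Counter
from typing import Dict, Sequence


def distribution(values: Sequence[str]) -> Dict[str, float]:
    """Relative frequency of each category in a window of data."""
    counts = Counter(values)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {category: n / total for category, n in counts.items()}


def psi(baseline: Sequence[str], target: Sequence[str], eps: float = 1e-6) -> float:
    """Population Stability Index between two categorical samples.

    Categories missing from one window are floored at eps so the
    logarithm stays defined.
    """
    p = distribution(baseline)
    q = distribution(target)
    score = 0.0
    for category in set(p) | set(q):
        b = max(p.get(category, 0.0), eps)
        t = max(q.get(category, 0.0), eps)
        score += (t - b) * math.log(t / b)
    return score


# Made-up samples: the baseline week leans toward BA, DFS, and DLTR,
# the target week toward AAPL and AMZN.
prior_week = ["BA", "DFS", "DLTR", "BA", "DFS", "AAPL"]
current_week = ["AAPL", "AMZN", "AAPL", "AMZN", "AAPL", "BA"]
weekly_drift = psi(prior_week, current_week)
```

Identical windows give a PSI of zero, and the value grows as the mix of tickers diverges, which makes it straightforward to compare against an alert threshold.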

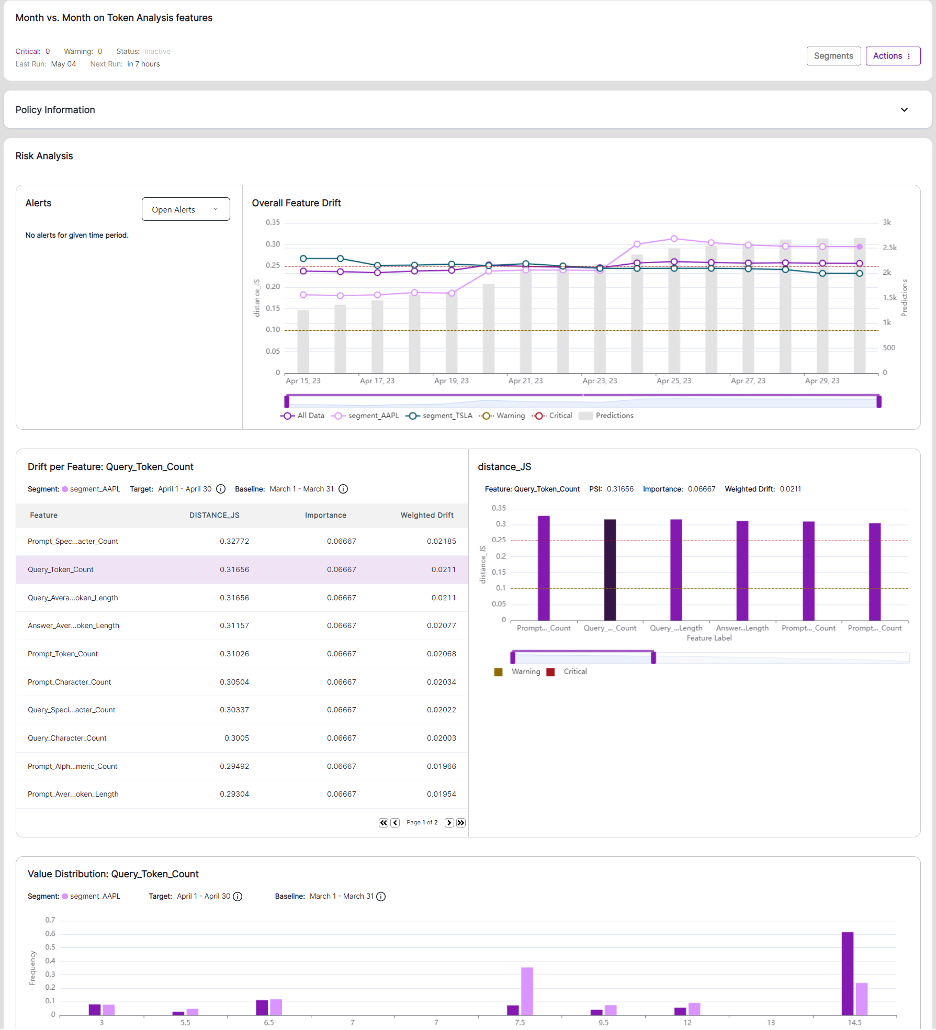

Another policy aims to monitor changes in user behavior by measuring the length of questions directed at hila concerning Apple and Tesla, which are defined as distinct segments. The question length can be correlated with the performance of the LLM, as more informative and precise instructions often result in lengthier queries and improved responses. The provided screenshot illustrates that, in comparison to March, users asked lengthier questions regarding Apple’s financials and business performance in April.

The month-to-month drift shows that, compared with March, user queries about Apple were longer in April.
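For a numeric feature such as question length, drift between two months can be measured by comparing the two empirical distributions. The sketch below uses the two-sample Kolmogorov-Smirnov statistic as one possible choice; the post does not state which metric the policy uses, and the function name and sample lengths are assumptions for illustration.

```python
from bisect import bisect_right
from typing import Sequence


def ks_statistic(baseline: Sequence[float], target: Sequence[float]) -> float:
    """Two-sample Kolmogorov-Smirnov statistic.

    This is the largest gap between the two empirical cumulative
    distribution functions. It ranges from 0 (identical samples)
    to 1 (no overlap at all).
    """
    if not baseline or not target:
        raise ValueError("both samples must be non-empty")
    a = sorted(baseline)
    b = sorted(target)
    # The largest gap always occurs at one of the observed values.
    points = sorted(set(a) | set(b))
    return max(
        abs(bisect_right(a, x) / len(a) - bisect_right(b, x) / len(b))
        for x in points
    )


# Made-up question lengths (in words) for one segment in two months.
march_lengths = [6, 7, 8, 8, 9, 10]
april_lengths = [9, 11, 12, 12, 14, 15]
length_drift = ks_statistic(march_lengths, april_lengths)
```

The statistic only reports that the distributions differ, not the direction, so a dashboard would typically show it alongside a summary such as the mean or median length per month.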

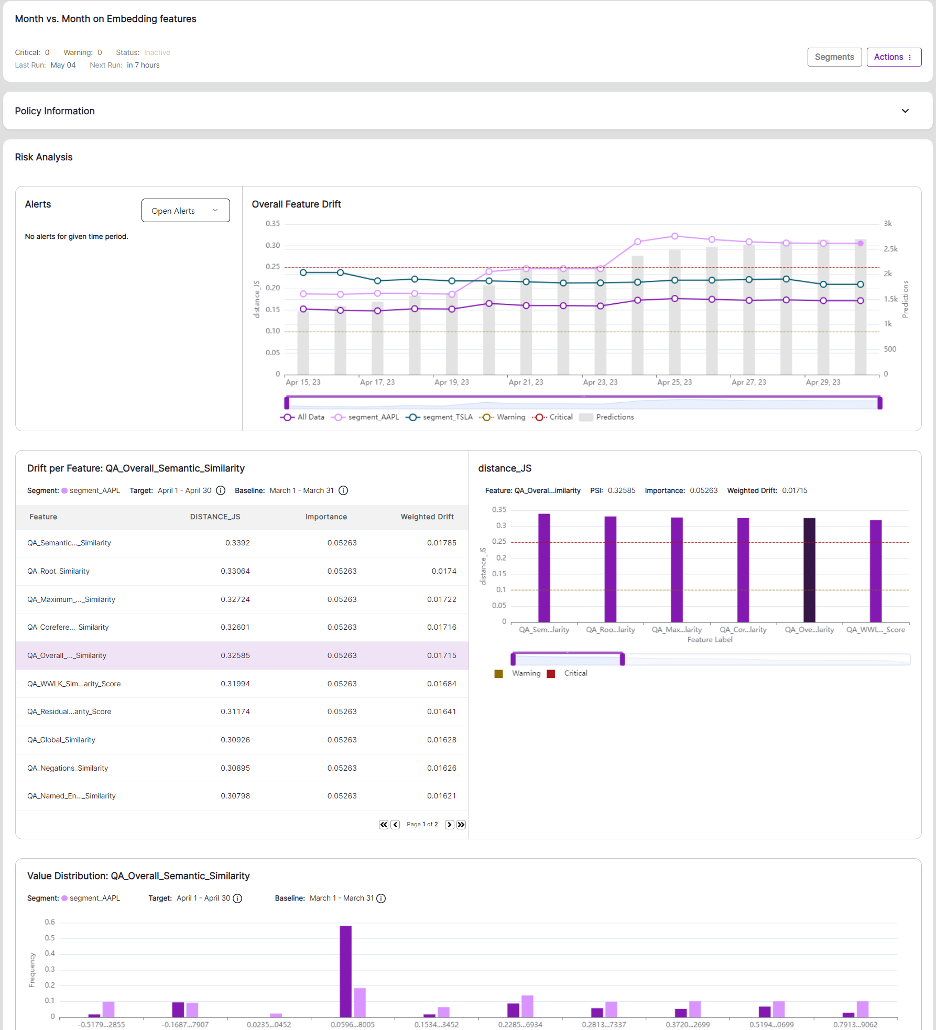

The third policy centers around monitoring the similarity or diversity of user queries on a monthly basis. Similar to the second policy, this monitoring policy also focuses on Apple and Tesla. As depicted in the screenshot, the overall semantic similarity of queries in April appears lower than that of March. In other words, users seemed to ask more similar questions in April while exhibiting a more diverse range of inquiries in March.

The monitoring shows that, compared with March, the overall semantic similarity of queries in April is low. In other words, users asked more similar questions in April and more diverse questions in March.
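As a rough illustration of how query similarity within a window could be summarized, the sketch below averages the cosine similarity over every pair of query embeddings. This is an assumed definition, not necessarily the one VIANOPS computes; the function names are hypothetical, and the tiny two-dimensional vectors stand in for real high-dimensional embeddings.

```python
import math
from itertools import combinations
from typing import Sequence


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def mean_pairwise_similarity(embeddings: Sequence[Sequence[float]]) -> float:
    """Average cosine similarity over all distinct pairs of embeddings."""
    pairs = list(combinations(embeddings, 2))
    if not pairs:
        raise ValueError("need at least two embeddings")
    return sum(cosine_similarity(u, v) for u, v in pairs) / len(pairs)


# Toy embeddings: the first window points in nearly one direction,
# the second is spread out.
tight_window = [[1.0, 0.0], [0.9, 0.1], [1.0, 0.1]]
spread_window = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]
tight_score = mean_pairwise_similarity(tight_window)
spread_score = mean_pairwise_similarity(spread_window)
```

A monitoring system might track this value, or a distance derived from it, for each window and segment, and then compare windows against one another.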

Conclusion

Monitoring language models is still in its early stages, and researchers and companies, including Vianai, are actively exploring different possibilities. This example demonstrates one of the ways VIANOPS can be used to monitor a large language model effectively. VIANOPS provides data scientists and machine learning engineers with a suite of highly flexible tools for defining and executing drift monitoring of both structured and unstructured data in their own unique business setting.

It is important to remember that monitoring a machine learning model in production is just as important as training a good model. While we may benefit from the unparalleled business value provided by large language models, using any model without rigorous monitoring and continuous refinement could cause significant financial or reputational harm.

If you would like to learn more about monitoring LLMs in your enterprise, get in touch here.

If you would like to try VIANOPS free, sign up here.